LLMs are remarkably good at explaining things. But there's a fundamental limitation: the model is stateless. Every conversation starts from zero. It doesn't remember that you struggled with eigenvectors last week, or that you learn best through geometric intuition rather than algebraic manipulation.

This statelessness isn't just an inconvenience—it cripples the model's ability to teach well. Good tutoring is fundamentally about sequencing: knowing what to teach next given what the student already knows. A great tutor doesn't just explain concepts clearly; they identify precisely where your understanding breaks down, trace that breakdown to its root cause in some earlier misconception, and construct a path that builds from stable ground. None of this is possible without persistent memory of the student's knowledge state.

Research on student modeling, including work from Emma Brunskill's lab at Stanford, has spent years formalizing this insight. Deep Knowledge Tracing (Piech et al., 2015) showed that modeling a student's knowledge as a dynamic state, updated after each interaction, improves prediction of what they'll struggle with next. More recent work on educational knowledge graphs represents knowledge as a graph in which concepts are nodes and prerequisite relationships are edges. Students who follow paths respecting these dependencies consistently outperform those who don't.

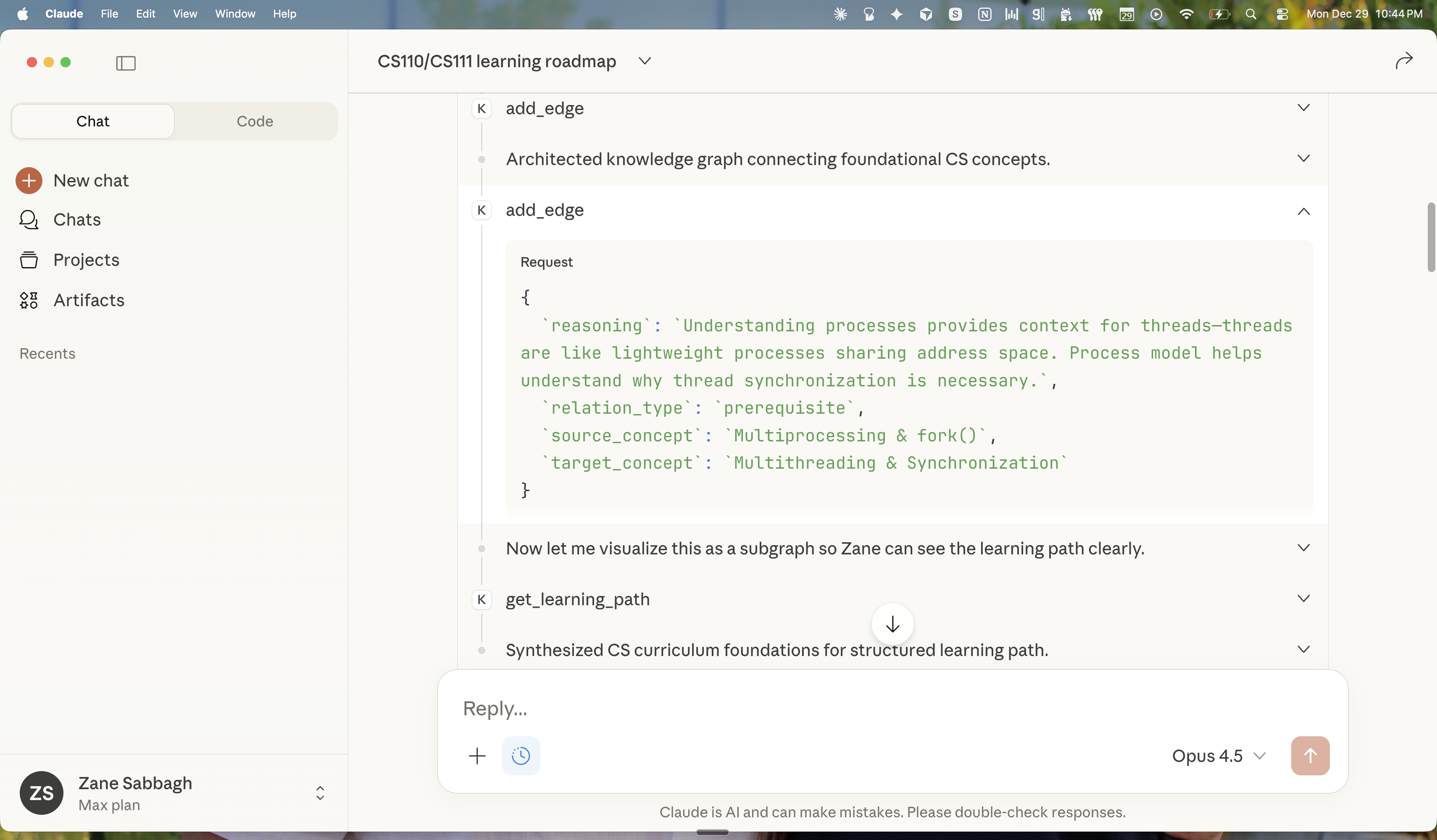

The Knowledge Graph MCP Server gives LLMs this missing infrastructure. It provides a persistent, structured representation of what each student knows—not as a flat checklist, but as a graph that captures how concepts depend on each other. The LLM can query this graph to find knowledge gaps blocking progress, identify concepts the student is ready to learn, and detect where misconceptions might be cascading through dependent material. The model gains the ability to reason about your learning, not just learning in the abstract.
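To make the three graph queries concrete, here is a minimal sketch in Python. It is not the server's actual implementation: the `KnowledgeGraph` class, its method names, the single mastery score per concept, and the 0.7 threshold are all assumptions chosen for illustration.

```python
from dataclasses import dataclass, field

# Assumed cutoff for "mastered"; the real system may use a different rule.
MASTERY_THRESHOLD = 0.7


@dataclass
class KnowledgeGraph:
    """Hypothetical per-student prerequisite graph (illustrative only)."""
    # concept -> set of direct prerequisite concepts
    prereqs: dict = field(default_factory=dict)
    # concept -> mastery score in [0, 1]
    mastery: dict = field(default_factory=dict)

    def add_concept(self, name, requires=()):
        self.prereqs.setdefault(name, set()).update(requires)
        for r in requires:
            self.prereqs.setdefault(r, set())

    def is_mastered(self, concept):
        return self.mastery.get(concept, 0.0) >= MASTERY_THRESHOLD

    def ready_to_learn(self):
        """Unmastered concepts whose direct prerequisites are all mastered."""
        return sorted(
            c for c, reqs in self.prereqs.items()
            if not self.is_mastered(c) and all(self.is_mastered(r) for r in reqs)
        )

    def blocking_gaps(self, target):
        """Unmastered concepts anywhere upstream of `target`."""
        gaps, seen, stack = set(), set(), list(self.prereqs.get(target, ()))
        while stack:
            c = stack.pop()
            if c in seen:
                continue
            seen.add(c)
            if not self.is_mastered(c):
                gaps.add(c)
            stack.extend(self.prereqs.get(c, ()))
        return sorted(gaps)

    def dependents(self, concept):
        """Concepts downstream of `concept`, where a misconception could cascade."""
        out, stack = set(), [concept]
        while stack:
            cur = stack.pop()
            for c, reqs in self.prereqs.items():
                if cur in reqs and c not in out:
                    out.add(c)
                    stack.append(c)
        return sorted(out)


graph = KnowledgeGraph()
graph.add_concept("vectors")
graph.add_concept("matrix_multiplication", ["vectors"])
graph.add_concept("determinants", ["matrix_multiplication"])
graph.add_concept("eigenvectors", ["matrix_multiplication", "determinants"])
graph.add_concept("pca", ["eigenvectors"])
graph.mastery = {"vectors": 0.9, "matrix_multiplication": 0.8, "determinants": 0.3}

print(graph.ready_to_learn())            # ['determinants']
print(graph.blocking_gaps("pca"))        # ['determinants', 'eigenvectors']
print(graph.dependents("determinants"))  # ['eigenvectors', 'pca']
```

In this toy graph the student wants PCA, but the weak spot is determinants: it is the only concept they are ready to work on, and everything downstream of it is at risk until it is fixed.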

The system tracks mastery across three dimensions: recall (can you retrieve it?), application (can you use it in new contexts?), and explanation (can you teach it?). This granularity matters. A student might recall a formula perfectly but fail to apply it to novel problems—a pattern invisible to single-score mastery models but obvious here. The LLM can now target remediation precisely: not re-teaching the whole concept, but specifically drilling the application dimension while leaving recall alone.
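The three-dimension idea can be sketched as a small record per concept. This is an illustrative assumption, not the server's schema: the `Mastery` class, the exponential-style update rule, the learning rate, and the 0.7 threshold are all hypothetical.

```python
from dataclasses import dataclass

DIMENSIONS = ("recall", "application", "explanation")


@dataclass
class Mastery:
    """Hypothetical per-concept mastery record with one score per dimension."""
    recall: float = 0.0
    application: float = 0.0
    explanation: float = 0.0

    def update(self, dimension, correct, rate=0.3):
        """Nudge one dimension toward 1.0 (correct) or 0.0 (incorrect).

        The update rule and rate are assumptions for illustration.
        """
        if dimension not in DIMENSIONS:
            raise ValueError(f"unknown dimension: {dimension}")
        current = getattr(self, dimension)
        target = 1.0 if correct else 0.0
        setattr(self, dimension, current + rate * (target - current))

    def needs_remediation(self, threshold=0.7):
        """Dimensions below the (assumed) threshold, in a fixed order."""
        return [d for d in DIMENSIONS if getattr(self, d) < threshold]


# A student who recalls the formula well but struggles to apply it.
quadratic_formula = Mastery(recall=0.9, application=0.4, explanation=0.75)
print(quadratic_formula.needs_remediation())  # ['application']

# A correct answer on an application exercise moves only that dimension.
quadratic_formula.update("application", correct=True)
print(quadratic_formula.recall)  # 0.9
```

Keeping the dimensions separate is what lets a tutor drill application alone; a single averaged score would fold the strong recall score into the weak application score and hide the gap.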

Claude synthesizing a CS curriculum learning path via the MCP server